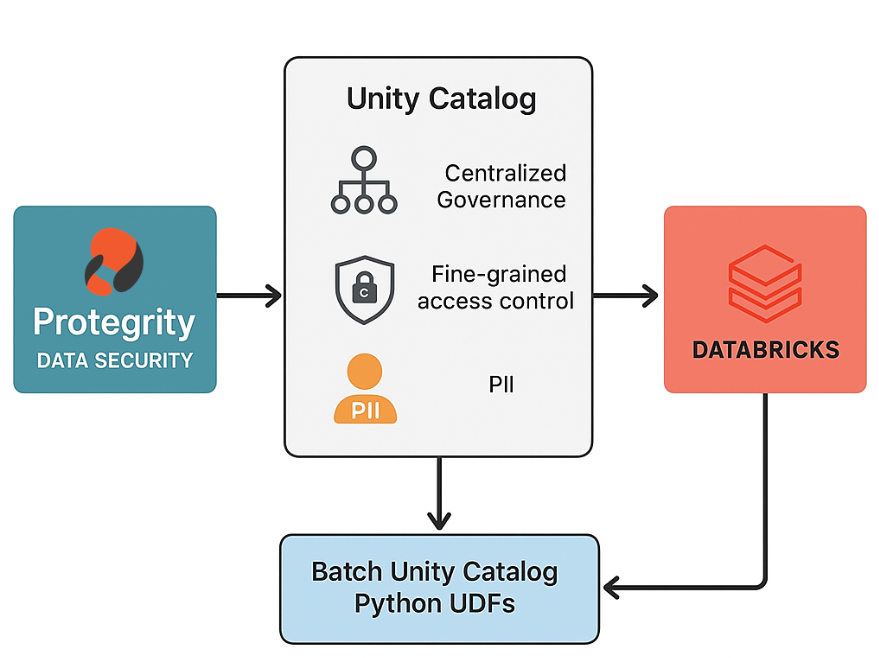

As enterprises increasingly use Databricks for analytics and AI, protecting sensitive data on the platform is paramount. Protegrity’s data protection integration with Databricks ensures that even sensitive data (like PII) can be analyzed in the cloud safely and in compliance with regulations. Two recent advancements make this integration especially powerful:

- Deep Integration with Databricks Unity Catalog – enabling centralized governance and fine-grained access control for protected data.

- Batch Unity Catalog Python UDFs – drastically improving performance by processing data in batches, so security doesn’t slow down your analytics.

By combining Unity Catalog’s unified governance with Protegrity’s vaultless tokenization and the efficiency of Batch Unity Catalog Python UDFs, organizations can achieve secure, scalable analytics without compromising speed or security.

Unified Governance via Unity Catalog

Protegrity integrates with Databricks’ Unity Catalog to use its user identities, data classifications, and policies. Sensitive data is tagged and protected automatically, and only authorized users can see clear data, ensuring consistent policy enforcement across all workspaces.

High-Speed Protection with Batch Unity Catalog Python UDFs

The integration uses Databricks’ new Batch Unity Catalog Python UDFs to process rows in batches. Instead of making one API call per data value, a single call transforms thousands of values, which greatly reduces per-call overhead.
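To make the batching argument concrete, here is a minimal Python sketch that counts round trips for the two calling patterns. Everything in it is an assumption made for illustration: `FakeProtectApi`, `protect_per_value`, and `protect_in_batches` are hypothetical names, the "tokenization" is a placeholder string transform, and plain Python lists stand in for whatever batch type the runtime passes to a UDF handler. It is not Protegrity's or Databricks' actual API.

```python
from typing import Callable, List

# Hypothetical type: a function that protects a whole list of values in one round trip.
ProtectCall = Callable[[List[str]], List[str]]


class FakeProtectApi:
    """Toy stand-in for a remote protection service; it only counts round trips."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, batch: List[str]) -> List[str]:
        self.calls += 1
        # Placeholder transform, NOT real tokenization.
        return ["tok_" + value[::-1] for value in batch]


def protect_per_value(values: List[str], call_api: ProtectCall) -> List[str]:
    """One round trip per value: the pattern that batching avoids."""
    return [call_api([value])[0] for value in values]


def protect_in_batches(
    values: List[str], call_api: ProtectCall, batch_size: int = 1000
) -> List[str]:
    """One round trip per batch of up to batch_size values."""
    protected: List[str] = []
    for start in range(0, len(values), batch_size):
        protected.extend(call_api(values[start:start + batch_size]))
    return protected


if __name__ == "__main__":
    values = [f"user{i}@example.com" for i in range(2500)]
    per_value_api, batched_api = FakeProtectApi(), FakeProtectApi()
    # Both patterns produce the same protected values.
    assert protect_per_value(values, per_value_api) == protect_in_batches(values, batched_api)
    print(per_value_api.calls, batched_api.calls)
```

With 2,500 values and a batch size of 1,000, the per-value pattern makes 2,500 round trips while the batched pattern makes 3. The output is identical, so the saving comes entirely from amortizing per-call overhead.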

Figure 1: Protegrity Protector: Databricks Unity Catalog

Seamless Unity Catalog Integration for Governance

Databricks Unity Catalog is the unified governance layer for Databricks, providing central control over data access across notebooks, jobs, and SQL endpoints. Protegrity’s integration is designed to work hand-in-hand with Unity Catalog:

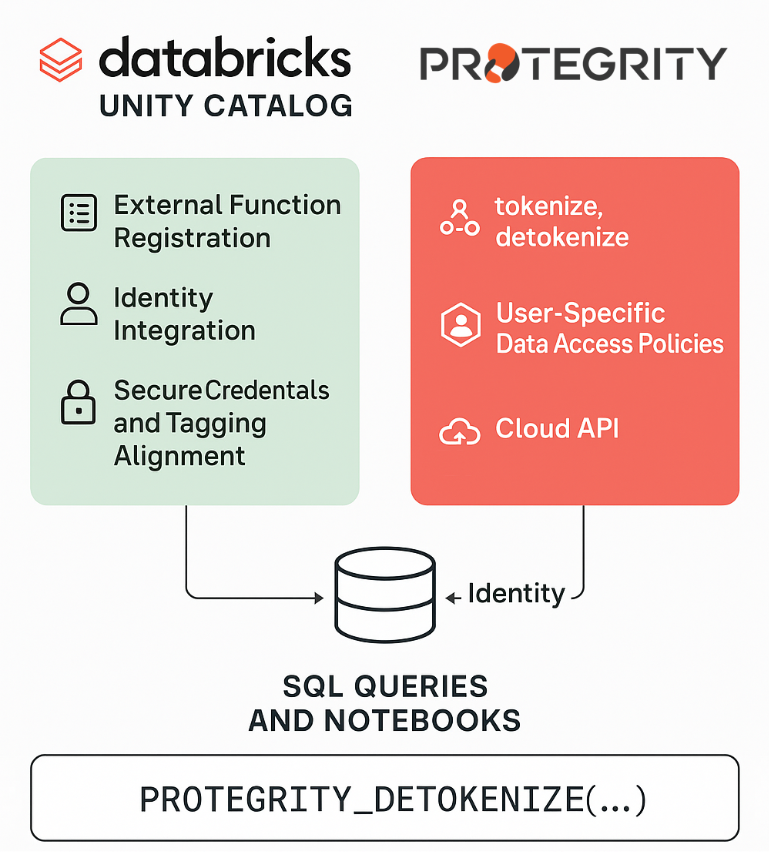

Figure 2: Protegrity Protector Details: Databricks Unity Catalog

- External Function Registration: Protegrity’s data-protection functions (like tokenize/de-tokenize UDFs) can be registered in Unity Catalog as Python UDFs. This makes them easily accessible in any SQL query or notebook, just like native functions. For example, a data analyst can call PROTEGRITY_DETOKENIZE(column) in a query, and Unity Catalog routes that call to Protegrity’s UDF behind the scenes.

- Identity Integration (User Context): Unity Catalog ensures every query runs with a specific user identity. Protegrity leverages Databricks’ Integrated User Context feature – this means when a Protegrity UDF is called, it automatically knows which Databricks user is running the query. That user information is passed to Protegrity’s policy engine. No one can spoof or bypass this – Databricks attaches a secure token for the user context so “nobody can impersonate the user” in the protection requests. Protegrity’s Enterprise Security Administrator (ESA) then applies the correct data access policy for that exact user. If “Alice” runs a query, Protegrity checks Alice’s permissions before revealing any data, whereas if “Bob” runs the same query, the policy check is done for Bob. This tight coupling means data access policies are enforced per user, matching Unity Catalog’s governance model.

- Built-in Secure Credentials: Unity Catalog provides service credentials, a secure way for the UDFs to authenticate to and call the Protegrity Cloud API from Databricks. This eliminates manual credential configuration and keeps the setup seamless and secure, with no hard-coded secrets.

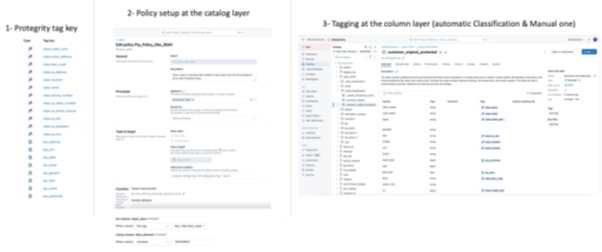

- Tagging and Classification Alignment: Unity Catalog offers data classification (currently in beta) and tagging. Protegrity can leverage these tags to drive its policies. For example, if Unity Catalog auto-tags a column as “Sensitive: PII”, you can have a Protegrity policy that keeps that column protected for all but a few roles/users, who see it detokenized automatically. Unity Catalog’s new Attribute-Based Access Control (ABAC) (currently in beta) ties access to data attributes/tags, and a Databricks ABAC policy can route tagged columns through the Protegrity Python UDFs. In short, the integration is built to respond to Unity Catalog’s governance metadata, making it easier to scale protection. If a new table is created and classified as sensitive, a policy in Protegrity can immediately ensure that data is only accessible via the appropriate UDF, without manual intervention.

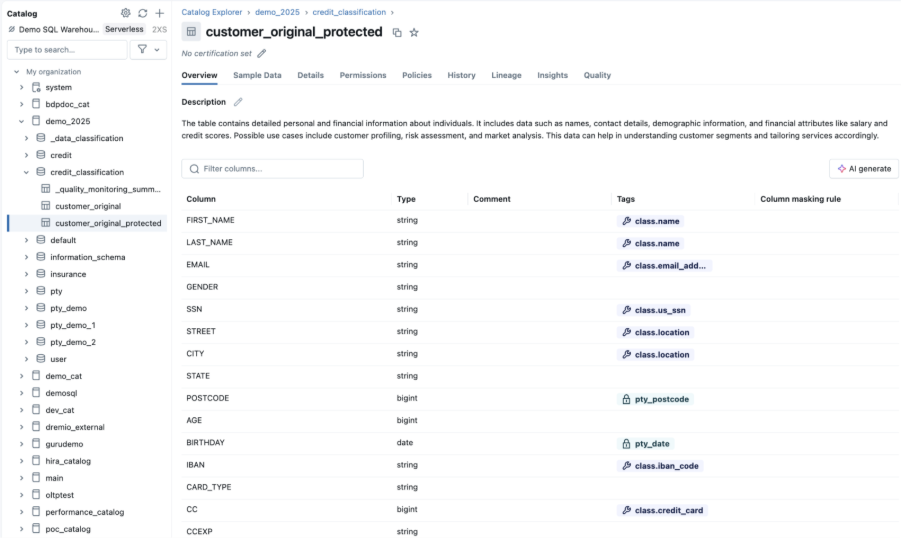

Figure 3: Protegrity Tagging – Unity Catalog

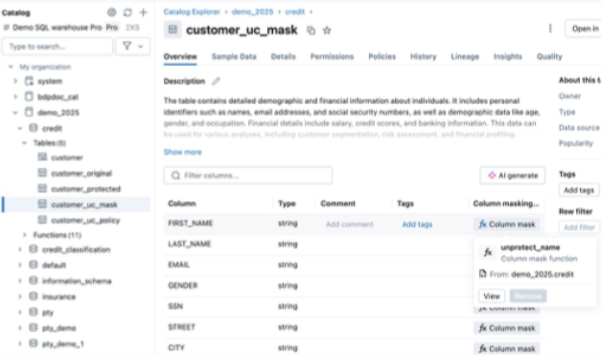

- Masking Rules via SQL UDFs: Unity Catalog allows defining column-level masking rules. The integration requires defining a SQL wrapper function that calls the Protegrity UDF and then using that wrapper in the masking rule. This is straightforward, and in the future Databricks may enable direct Python UDF usage in masking rules.

Figure 4: Protegrity Masking Rule – Unity Catalog

- As an alternative to masking rules, many customers simply use secure views or SELECT statements with the Protegrity UDF embedded, achieving the same end result.

The key point is that, whether via views, masking rules, or policies, Unity Catalog + Protegrity can dynamically protect/unprotect data per user across the lakehouse.

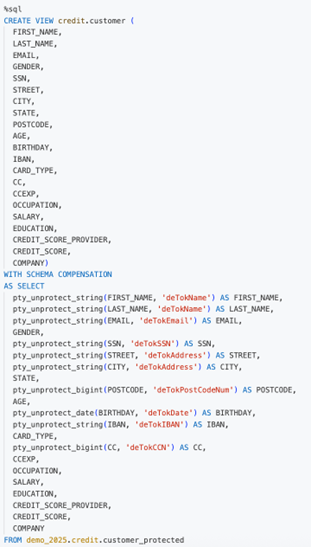

Figure 5: Protegrity View – Traditional Implementation

- Consistent Policy Enforcement Across Workspaces: Because Unity Catalog is account-wide, once Protegrity’s UDFs and policies are set up, they apply anywhere – Databricks SQL warehouses, standard clusters – everything uses the same governed definitions. For example, if a column is restricted in Unity Catalog, any attempt to query it on any cluster will invoke Protegrity’s protection function. This consistency gives peace of mind that there are no “back doors” or forgotten datasets; governance is uniformly enforced. Protegrity logs each access too, so you gain a centralized audit trail.
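The per-user enforcement and audit logging described in the list above can be sketched as follows. This is a minimal sketch under stated assumptions: `ToyPolicyEngine` and its fields are hypothetical names, not Protegrity's ESA API, and the token lookup table exists only to keep the example runnable (a real vaultless tokenizer would not rely on a lookup table like this).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class ToyPolicyEngine:
    """Hypothetical in-memory stand-in for a policy engine; not Protegrity's API."""

    # Data element name -> users allowed to see clear values.
    allowed_users: Dict[str, Set[str]]
    # Toy token -> clear value lookup, used only to keep the sketch runnable.
    clear_by_token: Dict[str, str]
    # One (user, data element, clear values returned?) entry per request.
    audit_log: List[Tuple[str, str, bool]] = field(default_factory=list)

    def unprotect(self, user: str, element: str, tokens: List[str]) -> List[str]:
        """Return clear values only if the calling user is permitted; log every request."""
        permitted = user in self.allowed_users.get(element, set())
        self.audit_log.append((user, element, permitted))
        if not permitted:
            # Unauthorized callers get the protected values back unchanged.
            return list(tokens)
        return [self.clear_by_token[token] for token in tokens]


if __name__ == "__main__":
    engine = ToyPolicyEngine(
        allowed_users={"email": {"alice"}},
        clear_by_token={"tok_1": "alice@example.com"},
    )
    # The same query yields different results depending on who runs it.
    print(engine.unprotect("alice", "email", ["tok_1"]))
    print(engine.unprotect("bob", "email", ["tok_1"]))
    print(engine.audit_log)
```

The design point is that the user identity is an input to every unprotect request, so the decision and the audit entry are both made per user rather than per cluster or per workspace.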

Example: Imagine a table credit.customer_protected with PII columns that were already protected earlier in the data flow: the customer’s first_name, last_name, email, and more. Unity Catalog’s auto-classification tags these columns as PII. A Protegrity policy is configured so that columns carrying those tags stay protected for all users except those in the “DataSteward” group.

In Unity Catalog, you will need to follow these steps:

- Pre-requisites:

- Register Protegrity’s detokenize() UDFs (part of the initial deployment phase of the Protegrity Databricks Protector)

- Use the Databricks tag key created by default, or create a Protegrity tag key at the Databricks Unity Catalog layer (if required)

- Create the Databricks policy for Protegrity (catalog layer)

- Run Databricks classification and manually check that the columns requiring data unprotection are tagged correctly

- Now, an analyst querying credit.customer_protected will trigger Protegrity’s UDF; if the analyst is not in the DataSteward group, the UDF returns protected names (tokens), but if a member of DataSteward runs the query, the UDF returns real names. Because the data is protected at rest by default, PII stays protected even if Unity Catalog is misconfigured or the UDF is bypassed. The analyst sees de-identified data, the steward sees real data – both from the same table, one policy, all managed centrally. This highlights the power of coupling Unity Catalog governance with Protegrity’s data-centric security.

Figure 6: Protegrity Implementation – Databricks ABAC
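The example above can be sketched in Python to show how a tag plus a group membership decides what each user sees. This is an illustrative sketch only: `render_row`, `toy_detokenize`, the `"PII"` tag string, and the sample row are assumptions, and the reversible "tok_" scheme is a placeholder rather than real tokenization. In the actual integration this decision is made by the Databricks policy and Protegrity's UDF, not by application code.

```python
from typing import Callable, Dict, Set

PII_TAG = "PII"                   # assumed tag name for classified columns
PRIVILEGED_GROUP = "DataSteward"  # group named in the example above


def toy_detokenize(token: str) -> str:
    """Placeholder inverse of a toy 'tok_' + reversed-value scheme."""
    return token[len("tok_"):][::-1]


def render_row(
    row: Dict[str, str],
    column_tags: Dict[str, Set[str]],
    user_groups: Set[str],
    detokenize: Callable[[str], str],
) -> Dict[str, str]:
    """Return the row as the user should see it.

    Values are stored protected, so the default path returns them untouched.
    Only PII-tagged columns are detokenized, and only for privileged users.
    """
    can_unprotect = PRIVILEGED_GROUP in user_groups
    return {
        column: detokenize(value)
        if can_unprotect and PII_TAG in column_tags.get(column, set())
        else value
        for column, value in row.items()
    }


if __name__ == "__main__":
    tags = {"first_name": {PII_TAG}, "email": {PII_TAG}, "signup_year": set()}
    stored = {
        "first_name": "tok_ecilA",
        "email": "tok_moc.elpmaxe@ecila",
        "signup_year": "2021",
    }
    print(render_row(stored, tags, {"Analysts"}, toy_detokenize))
    print(render_row(stored, tags, {"DataSteward"}, toy_detokenize))
```

Because the stored values are already protected, the unprivileged path does no work at all: skipping the function entirely still yields tokens, which mirrors the protected-at-rest behavior described in the last step.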

Overall, integrating with Unity Catalog means simpler deployment and stronger security. There’s no need to manage separate user accounts or mappings – Databricks user accounts are recognized by Protegrity. There’s no drift between what Databricks thinks a “sensitive column” is and what Protegrity protects – using tags and policies, they’re aligned. And administrators can use the familiar Databricks Unity Catalog interface to manage data access, while Protegrity works behind the scenes to enforce the protection aspect. It’s a “better together” story: Unity Catalog provides the who/what governance, Protegrity provides the how (tokenize/mask) enforcement.

Finally, it’s worth noting this integration approach is forward-looking. As Databricks rolls out new governance features, Protegrity will integrate those. The partnership has already checked that the core integration works on Unity Catalog-enabled compute. With Unity Catalog becoming the default for new Databricks workspaces, this integration ensures security is built into the foundation of your Lakehouse.

Benefits and Future Outlook

By focusing on Unity Catalog integration and performance through batch (vectorized) Python UDFs, the Protegrity + Databricks solution offers several tangible benefits:

- Stronger Security Posture: All sensitive data in Databricks can be governed and protected by policy. Unity Catalog integration ensures no data “falls through the cracks” – if it’s governed in Databricks, it’s protected by Protegrity. This greatly reduces the risk of accidental exposure. Even if someone gains access to the data lake files or attempts an unauthorized query, they’ll only see tokenized data. The integration essentially brings Zero Trust security into the Databricks environment: every data access is verified, and nothing sensitive is shown by default.

- Regulatory Compliance & Auditing: Many regulations (GDPR, HIPAA, PCI DSS, etc.) mandate controls like pseudonymization, encryption, and auditability. This solution addresses each of them. Data is pseudonymized via tokenization (satisfying GDPR’s recommendations for protecting personal data). Strict access controls and masking ensure compliance with “least privilege” access required by frameworks like HIPAA. Detailed logs of who accessed or unprotected what data are available for compliance audits. Using Unity Catalog’s classification plus Protegrity’s enforcement, companies can demonstrate that, for instance, “all EU personal data is masked unless you’re on the EU finance team,” and prove it with logs. This is a powerful story for compliance officers and could significantly shorten audit cycles.

- Ease of Use for Data Teams: From the user perspective (analyst, data scientist, etc.), there’s little to no friction. They might just query a different view (which is documented as containing protected data), or see a slight difference in how results appear (protected values for some fields). There’s no need to run special tools or go through lengthy approvals for each dataset – once the integration is in place, using protected data is as simple as using any other data. This encourages more data-driven collaboration. Teams don’t have to wait weeks for a DB admin to manually anonymize a dataset; they can query it directly (with Protegrity doing the unprotection on the fly). We often hear that data accessibility and security are at odds; this solution bridges that gap, making it actually easier to access data securely.

- Future-Proof Architecture: Both Databricks and Protegrity are continuing to enhance this integration. On the roadmap is even tighter Unity Catalog integration. Databricks is also working on Delta Sharing & Clean Rooms, and Protegrity is looking into how tokenization can apply there so that shared data remains protected by default.

- Joint Support and Collaboration: Since this solution is born out of a partnership, customers benefit from joint support. Databricks Solution Architects and Protegrity Engineers have a direct line of communication. Already, they have worked together to solve issues like credential management and to refine user context passing. Going forward, as new needs come up (say, support for a new Databricks feature or a new encryption standard), customers can expect a coordinated response.

Conclusion: The combination of Unity Catalog integration and vectorized UDF performance makes Protegrity’s Databricks solution both secure and enterprise-grade. Data governance admins get fine-grained control and central oversight, security teams get strong protection and compliance assurances, and data users get access to the data they need with minimal delay. It exemplifies the ideal of “secure data democratization”: everyone can use data freely, but within guardrails that protect privacy and IP.

For organizations with a lot of sensitive data, this means they no longer have to silo that data away from Databricks. They can bring their most sensitive workloads onto the lakehouse, confident that Protegrity + Unity Catalog will enforce the necessary protections. Use cases that were challenging before – like analyzing customer behavior across regions (with differing privacy laws), or doing ML on healthcare data – become feasible in one platform. And they can do it at scale, as fast as their other analytics.

In essence, Databricks has become a safer place for sensitive data. By integrating Protegrity’s tokenization through Unity Catalog and accelerating it with vectorized UDFs, the platform can truly claim to enable both open data collaboration and strict data privacy at the same time. This synergy between Databricks and Protegrity is an excellent example of how partnerships can solve the age-old tussle between data utility and data security: here, you get the best of both. Organizations that implement this will be at the forefront of using cloud data analytics responsibly and effectively, turning what was once a risk into a competitive advantage.