Originally presented at Gartner Data and Analytics 2025 in London

At Protegrity, we focus on securing the most important asset of all: the data itself. As a Solution Architect, I have the unique privilege of working with leading finance and retail organizations in EMEA. I get to see what kinds of data architectures they’re building, how they are automating their operations, and how they are trying to get the most out of AI and analytics: what they’re aiming to achieve, where they’re failing, and where they are succeeding.

This article is a collection of lessons learnt from our clients. I’ve grouped them under the headline of When Data Security Becomes a Strategic Advantage because that’s the shift we’re seeing in the industry. Cybersecurity is no longer just a compliance checkbox; it has grown into a strategic non-negotiable. As put by Peter Kyle, the British Secretary of State for Science, Innovation and Technology, cybersecurity is a critical enabler of economic growth, fostering a stable environment for innovation and investment.

Today’s reality is that any business operating in the digital space is under constant, relentless attack from cybercriminals. The attacks of catastrophic magnitude are the ones that make the headlines, like the hack that brought Marks and Spencer’s operations to a halt in April 2025. M&S had to shut down online orders for 10 days, and saw nearly £600 million wiped off its market value.

The companies you don’t hear about are the ones that built data protection into the fabric of their systems. Many of them are Protegrity clients. In this article, I’ll attempt to explain how our customers have successfully used data security as the foundation for achieving operational resilience, cutting costs, and powering business growth.

What We Want vs What We Have

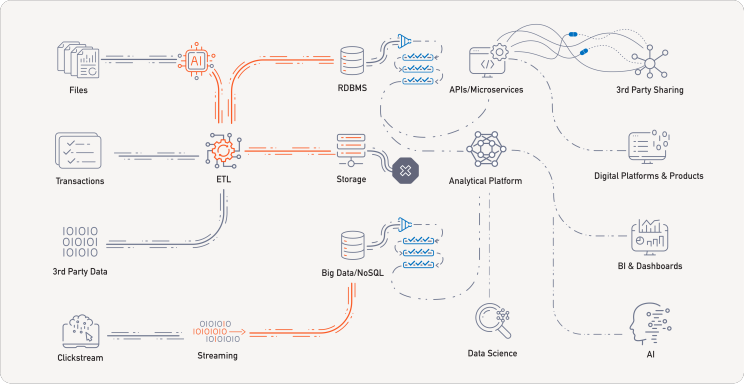

Let’s start with a little peek under the hood. What every business we talk to wants is a platform that can support their operations without friction, where the right data reaches the right people exactly when it’s needed. Such an ideal system is performant, scalable, and agile (and ideally inexpensive).

What we have can be very different from that vision. In my experience, it doesn’t matter whether you’re building from scratch or inheriting decades of technical debt: most data architectures, at least to an extent, resemble the plumbing in a hundred-year-old house. Without any doubt there’s some beautifully laid copper piping in there, but poke around and you’ll find leaks, blockages, and more than a few parts held together with duct tape.

These are exactly the top-of-mind challenges our clients had to overcome, and what I’ll focus on in the next sections. We’ll look at fixing leaks (preventing data breaches), resolving blockages (automating data access), and getting rid of the duct tape (unlocking data for sharing).

1. Preventing Data Breaches

Strategic advantage through business resilience

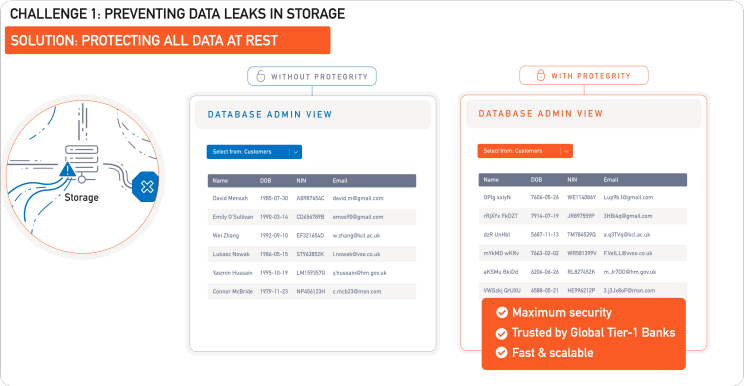

Let’s start with data breaches. This is where most traditional InfoSec programs focus, building walls around data as a way of securing it. That’s your endpoint protection, application security, DLPs, WAFs, VPNs, and others. These are all hugely important, but an often overlooked issue with this approach is that once an attacker gets past those walls, there’s nothing left between them and the raw data, typically stored in the clear.

That’s the anatomy of a data breach.

Now, the best way to avoid that would be not to store any sensitive data at all, but that’s not exactly a realistic option. Businesses need data to operate. So the next best thing is to replace sensitive data with a reversible substitute, for example a token. Techniques such as tokenization let you do exactly that, effectively removing the original data from the environment. Call it the ultimate data protection method.

If someone breaks in, what they’ll find is complete gibberish. The new values only resemble the originals in shape: unlikely combinations of letters, numbers, or dates sit where the real thing used to be.
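
To make the idea concrete, here is a minimal sketch of vault-based tokenization in Python. It is an illustration only, not Protegrity’s implementation: the `TokenVault` class and its in-memory dictionaries are hypothetical, and a real vault would be a hardened, access-controlled service. Each digit is swapped for a random digit and each letter for a random letter, so the token keeps the shape of the original while carrying none of its content.

```python
import secrets
import string


class TokenVault:
    """Toy, in-memory token vault (hypothetical; for illustration only)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def _random_like(self, value):
        # Swap digits for random digits and letters for random letters,
        # keeping separators so the token has the same shape as the input.
        out = []
        for ch in value:
            if ch.isdigit():
                out.append(secrets.choice(string.digits))
            elif ch.isalpha():
                out.append(secrets.choice(string.ascii_letters))
            else:
                out.append(ch)
        return "".join(out)

    def tokenize(self, value):
        # The same input always maps to the same token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        for _ in range(100):
            token = self._random_like(value)
            if token not in self._token_to_value and token != value:
                break
        else:
            raise RuntimeError("could not generate a unique token")
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token):
        # In practice this call would sit behind strict access control.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111-1111-1111-1111")
print(token)                    # random digits in the same 4-4-4-4 shape
print(vault.detokenize(token))  # the original value
```

The databases and applications only ever store the token. The mapping back to the real value lives in the vault, which is the one place that needs the heaviest protection.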

This concept becomes extremely powerful at scale. Consider that some of our clients have hundreds of databases and thousands of applications. That entire stack is a potential attack surface. But with data tokenized at rest, and inaccessible through the commonly exploited database admin, DevOps or developer roles, the risk of a data breach is dramatically reduced.

This is where data security becomes a strategic advantage. By providing the ultimate protection over data and reducing the threat surface, it enables the business to stay resilient in the hostile digital space. Even in the face of a breach, the data stays safe, the operations continue, customers are protected, and the business stays off the front page.

Side notes:

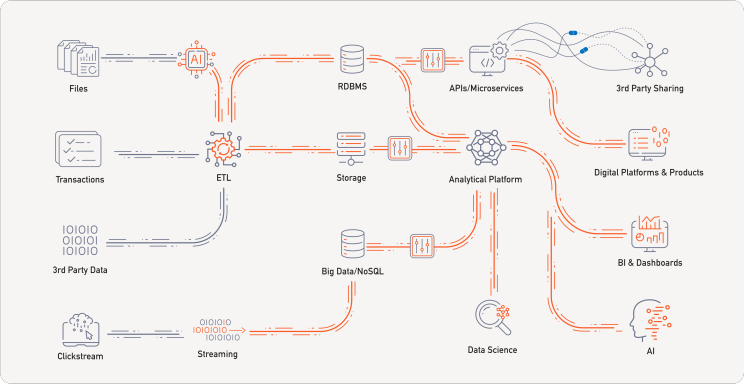

Without getting into too much technical detail, the idea is that data protection should be applied as early as possible, ideally at the point where data enters your environment. That means integrating protection directly into ETL pipelines and streaming processes, so that sensitive data is never exposed in the clear as it moves through your systems.
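
As a rough sketch of what protecting data at the point of entry can look like, the Python generator below wraps a stream of incoming records and protects the sensitive fields before anything downstream sees them. The field names, the `protect_stream` function and the `demo_protect` stand-in are all assumptions made for this example; in a real pipeline the `protect` callable would invoke your tokenization service.

```python
import hashlib

# Hypothetical classification of which fields count as sensitive.
SENSITIVE_FIELDS = {"name", "national_id"}


def demo_protect(value):
    # Stand-in only: a truncated hash is NOT reversible tokenization.
    # A real deployment would call a tokenization service here.
    return "tok_" + hashlib.sha256(value.encode("utf-8")).hexdigest()[:12]


def protect_stream(records, protect, sensitive_fields=SENSITIVE_FIELDS):
    """Yield copies of incoming records with sensitive fields protected."""
    for record in records:
        yield {
            key: protect(value) if key in sensitive_fields else value
            for key, value in record.items()
        }


incoming = [
    {"name": "Ada Lovelace", "national_id": "AB123456C", "city": "London"},
]
for row in protect_stream(incoming, demo_protect):
    print(row)  # name and national_id are replaced, city is untouched
```

Because the protection step sits at the head of the pipeline, every later stage (staging tables, logs, downstream jobs) only ever handles the protected values.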

More and more often, we see our clients using GenAI so that unstructured data, such as text files, can be ingested into their environments. LLMs are far better at understanding language and context than traditional methods, even the most elaborate regular expressions. The models can detect and classify sensitive information inside documents and messages with high accuracy, and feed that information to services that trigger protection. GenAI paired with tokenization eliminates the manual effort involved and vastly reduces risk.

2. Accelerating Data Access

Strategic advantage through reduced compliance processing

The next typical challenge is access: specifically, how long it takes for people to get the data they need to do their jobs.

In 2024, we commissioned a survey to understand just that. We knew access to data was a pain point, but the results were still a surprise: the study showed that only 2 percent of companies were able to access sensitive data within a week. For the majority, the wait time ranged from one to six months. I’ll bet that if you’re working for a bank, this will resonate.

Risk and compliance are the – rather predictable – culprits. Before anyone is allowed to see sensitive data, their request has to move through a multi-layered approval process. Depending on how the data is classified, it might require sign-offs from several teams. As we have learnt, that process can take months. And even then, the request might still be declined, forcing the person to try again or accept defeat.

This is where data security becomes a strategic advantage. Given that we can’t just remove compliance, what’s the next best thing? It’s automating it, translating what needs to be done manually into something the code can do.

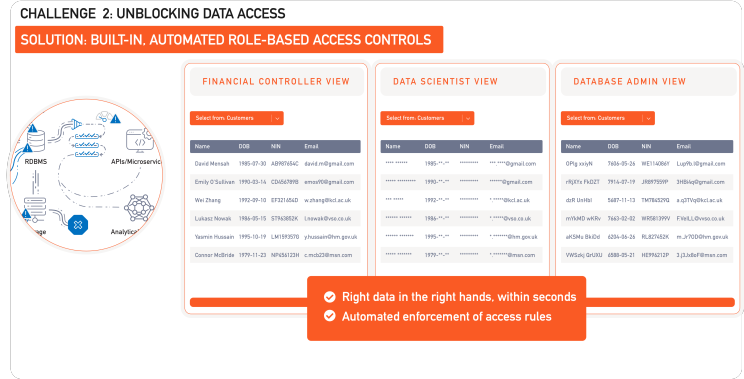

That programmatic answer is role-based access control (RBAC), managed centrally through a policy but enforced locally on the database or application. RBAC grants you central control over how the data is used and by whom. A single policy can manage the entire environment, across platforms, clouds, data stores and apps, bringing them to the same level of security and oversight. It’s governance through code: as your policy changes, the rules are enforced automatically and consistently across your environment.

With RBAC you can decide that a data scientist role may be given access to location and age data for their analysis – but not names or national IDs, as these carry little analytical value whilst their exposure is considered high-risk. A developer or database admin doesn’t need access to any real data at all, hence closing the typical avenue for exploits. Everyone gets the level of access they need, and nothing more.
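
The example above can be sketched as a small policy table plus one enforcement function. This is a simplified illustration in Python, not the format of any real policy engine: the role names, the `POLICY` dictionary and `view_for_role` are hypothetical. Anything a role is not explicitly granted comes back masked; in a tokenized store, those fields would simply stay as tokens.

```python
# Hypothetical central policy: which fields each role may see in the clear.
POLICY = {
    "data_scientist": {"location", "age"},
    "developer": set(),
    "database_admin": set(),
}


def view_for_role(record, role, policy=POLICY, mask="***"):
    """Return the record as the given role is allowed to see it."""
    # Roles missing from the policy get no clear-text access at all.
    allowed = policy.get(role, set())
    return {
        field: (value if field in allowed else mask)
        for field, value in record.items()
    }


customer = {
    "name": "Ada Lovelace",
    "national_id": "AB123456C",
    "location": "London",
    "age": 36,
}
print(view_for_role(customer, "data_scientist"))
print(view_for_role(customer, "developer"))
```

The design choice worth noting is deny by default: changing who sees what means editing the policy, not the applications that enforce it.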

Again, this solution becomes powerful at scale: no matter the breadth of your environment, a centralized security pane provides you with a consistent, technology-agnostic way to govern access to sensitive data. You’re building a control framework over your data.

We have customers that manage their systems across the globe with a single security policy administered from the HQ. One of the global banks we work with has a team of two people in New York that oversees the system and sets the standard policy for all entities, allowing for small regional adjustments depending on local regulation. Another client of ours used the system to build what they called a data supermarket. In their model architecture, analysts could browse available datasets, add them to a cart, and “check out”, with access levels enforced in real time based on their role. It reduced the client’s approval chains from weeks to seconds.

This is the strategic advantage: a clear governance model that translates manual rules to programmatic access controls. The right data in the right hands, ASAP. Ultimately, it’s not only cutting the time and cost of the compliance process; it’s also producing the insights quicker, which leads to business growth.

3. Enabling Secure Data Sharing

Strategic advantage through data monetization

The third challenge, and one that is becoming more prevalent in the industry, is data sharing. Given how interconnected today’s economy is, the ability to share data with partners, suppliers, or customers is as much an opportunity for the business as it is a fundamental need.

The problem is that most data sharing today is still very much a DIY exercise: one-off, and hard to repeat. Every project is custom: the data is pulled, wrangled, reviewed and shared through ad hoc processes. When a similar request comes up again, the whole cycle starts from scratch.

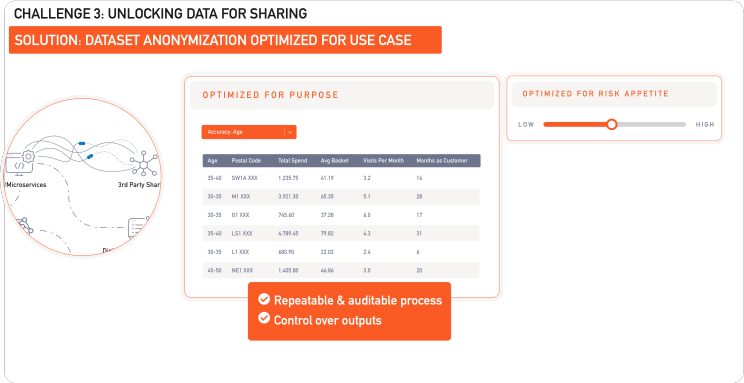

The opposite of that process is data sharing that is repeatable, scalable, and secure. This is exactly the promise of data anonymization. In a nutshell, anonymization removes direct identifiers, such as people’s names or passport numbers, and generalizes quasi-identifiers (attributes which, taken together, can identify a person). A 35-year-old customer becomes a customer in the 35-45 age bucket once anonymization does its magic. The goal of the process is to prevent re-identification of the data subjects, while preserving the utility of the data as much as possible.
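
Here is a minimal Python sketch of those two steps: dropping direct identifiers and generalizing age into a bucket. The field names and the `age_bucket` and `anonymize` helpers are assumptions made for this example, and the buckets are anchored at 5 so that they line up with the 35-45 range mentioned above.

```python
# Hypothetical set of fields treated as direct identifiers.
DIRECT_IDENTIFIERS = {"name", "passport_number"}


def age_bucket(age, width=10, offset=5):
    """Map an exact age to a range label; the upper bound is exclusive."""
    low = ((age - offset) // width) * width + offset
    return f"{low}-{low + width}"


def anonymize(record, width=10, offset=5):
    """Drop direct identifiers and generalize the age quasi-identifier."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "age" in out:
        out["age"] = age_bucket(out["age"], width, offset)
    return out


customer = {"name": "Ada", "passport_number": "X1234567", "age": 35, "city": "London"}
print(anonymize(customer))           # {'age': '35-45', 'city': 'London'}
print(anonymize(customer, width=5))  # {'age': '35-40', 'city': 'London'}
```

The `width` parameter is the utility dial: narrower buckets keep more analytical precision in the age attribute, at the cost of making individuals easier to single out.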

Where anonymization becomes truly powerful is when it can be run at scale, with configurable outputs and measurable risk. Using tools like Protegrity’s Anonymization API, businesses are given control over how they want to share their data, depending on the use case. The system can be told to preserve the analytical utility of a specific attribute, say age, in turn slightly lowering the precision of other data points to keep the dataset secure as a whole. That 35-year-old customer would now be part of a smaller, more suitable bucket of 35-to-40-year-olds, perhaps. The system can also measure the risk associated with the generated dataset, providing a quantitative assessment of the likelihood of re-identification. This allows the business to make informed decisions based on its risk appetite. If the data is being shared publicly, your appetite for risk is minimal. If it’s being shared with a trusted partner, you might dial up utility and accept a slightly higher risk profile.
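
One common way to put a number on re-identification risk is k-anonymity: group the records by their quasi-identifier values, find the smallest group size k, and treat 1/k as the worst-case chance of picking out an individual. The Python sketch below shows that calculation. It is an assumption chosen for illustration, not a description of how Protegrity’s Anonymization API measures risk, and `reidentification_risk` is a hypothetical helper.

```python
from collections import Counter


def reidentification_risk(records, quasi_identifiers):
    """Return (k, worst-case risk) for the given quasi-identifier columns."""
    if not records:
        raise ValueError("need at least one record")
    # Count how many records share each combination of quasi-identifier values.
    groups = Counter(
        tuple(record[q] for q in quasi_identifiers) for record in records
    )
    k = min(groups.values())
    return k, 1.0 / k


shared = [
    {"age": "35-40", "city": "London"},
    {"age": "35-40", "city": "London"},
    {"age": "40-45", "city": "Leeds"},
    {"age": "40-45", "city": "Leeds"},
]
k, risk = reidentification_risk(shared, ["age", "city"])
print(k, risk)  # 2 0.5
```

A business could set a threshold on this figure per use case: a very low one for public release, a more relaxed one for a trusted partner.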

This is another, and final, example of where data security becomes a strategic advantage. Anonymization, when governed by risk and utility controls and provisioned in a repeatable framework, is the best solution for unlocking data for collaboration and monetization.

Have It All

Our clients are proving that data security can be a strategic advantage. When it’s built into the system foundation, it can truly transform the business. It’s the core ingredient of operational resilience, it enables faster decision making by automating access to data, and unlocks safe collaboration and monetization of data. These are the practical benefits that go beyond what is traditionally associated with security, or the outdated perception of it being just a cost center.

At Protegrity, that’s what we’ve been focused on for over 25 years. Many of our clients have been with us for just as long. We’re proud to be a small part of building some of the most resilient and performant systems in the industry.