Protegrity & Databricks

Protegrity Native

The integration is native to Protegrity and offers a more seamless experience when using this platform.

Integration type

- Analytics

Partner

Yes

Supported platforms

- AWS

- Azure

- GCP

Use cases

- Agentic Pipeline Protection & Runtime Enforcement

- Cloud Migration & SaaS Integration

- Internal Data Democratization & External Data Sharing With Partners/Vendors

- Privacy-enhanced Training Data for AI/ML Models

- Prompt Input Filtering & Output Curation for GenAI Systems

- Regulatory Compliance & Data Sovereignty

overview

In the age of AI, data is no longer just an asset; it is the foundation of intelligence. As enterprises accelerate their adoption of platforms like Databricks for analytics, machine learning, and GenAI, the need for scalable, secure, and compliant data protection has never been greater. Through Databricks Unity Catalog, this integration protects your data wherever it is stored and processed inside Databricks, from standard notebooks through to Genie, the natural language query service.

Key Integration Feature

Protegrity’s integration with Databricks delivers enterprise-grade data-centric security, enabling organizations to protect sensitive information across the entire data lifecycle—from ingestion and transformation to AI model training and GenAI orchestration. This partner page outlines how Protegrity empowers Databricks users to unlock the full value of their data while maintaining integrity, privacy, and compliance.

Features & Capabilities


01

Vaultless Tokenization: Replace sensitive data with format-preserving tokens that maintain analytical utility.

why it matters

Eliminate the latency and scalability bottlenecks of traditional lookup tables while ensuring that protected data retains its original format (e.g., length and type), allowing downstream applications and analytics to function without breaking.

HOW IT WORKS

A major retailer uses vaultless tokenization to protect credit card numbers; because the tokens pass standard Luhn validation checks, the QA team can use production-grade data for testing billing systems without ever exposing real customer financial details.
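The Luhn check mentioned in this example is a public checksum, and a minimal Python sketch of it is shown here for reference. It illustrates only the validation a format-preserving token would need to pass; it is not Protegrity's tokenization method, and the function name `luhn_valid` is chosen for illustration.

```python
def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    if len(digits) < 2:
        return False
    total = 0
    # Walk from the rightmost digit and double every second digit.
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9  # same as summing the two digits of the product
        total += digit
    return total % 10 == 0


# A widely used test card number passes; changing the last digit fails.
print(luhn_valid("4111 1111 1111 1111"))
print(luhn_valid("4111 1111 1111 1112"))
```

A billing test suite that runs this kind of check would accept any 16-digit value with a correct check digit, which is why tokens that preserve the format can stand in for real card numbers.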

02

Batch Unity Catalog Python UDFs: High-performance protection via vectorized functions that process thousands of values in a single call.

why it matters

Drastically reduce processing time for massive datasets by minimizing function-call overhead, enabling data teams to secure or de-tokenize millions of records within tight SLA windows.

HOW IT WORKS

A healthcare analytics firm runs nightly ETL jobs on millions of patient records; by switching to vectorized Python UDFs, they reduced their encryption processing time from 4 hours to under 20 minutes.
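The batching idea behind this capability can be sketched in plain Python. The sketch below assumes a hypothetical `protect_batch` function that stands in for one protection call covering a whole batch; it only reverses strings so the example runs, and it is not a real protection method or the Databricks UDF interface. The point it illustrates is that 10,000 values grouped into batches of 1,000 need 10 calls rather than 10,000.

```python
from typing import Dict, Iterable, Iterator, List

# Counts how many times the (hypothetical) protection call is made.
stats: Dict[str, int] = {"calls": 0}


def protect_batch(values: List[str]) -> List[str]:
    """Hypothetical stand-in for a single call that protects a whole batch.

    It reverses each string purely so the example is runnable.
    """
    stats["calls"] += 1
    return [value[::-1] for value in values]


def handle_batches(batches: Iterable[List[str]]) -> Iterator[List[str]]:
    """Yield one protected batch per input batch (one call per batch)."""
    for batch in batches:
        yield protect_batch(batch)


def chunk(values: List[str], size: int) -> Iterator[List[str]]:
    """Split a list into consecutive batches of at most `size` items."""
    for start in range(0, len(values), size):
        yield values[start:start + size]


records = [f"patient-{i}" for i in range(10_000)]
protected = [v for batch in handle_batches(chunk(records, 1_000)) for v in batch]
print(len(protected), "values protected in", stats["calls"], "calls")
```

The batch size of 1,000 is an arbitrary choice for the illustration; in practice the engine decides how values are grouped.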

03

User-Aware Policy Enforcement: Policies are dynamically applied based on the identity of the user executing the query.

why it matters

Enforce strict “need-to-know” access principles by automatically adjusting data visibility in real-time, allowing a single dataset to safely serve multiple departments with different clearance levels.

HOW IT WORKS

When querying a unified employee table, a Regional Manager sees the full names and salaries of their direct reports, while a Data Scientist querying the exact same view sees only tokenized IDs and masked salary ranges for trend analysis.
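The scenario above can be approximated with a small Python sketch. Everything in it is an assumption made for illustration: the `POLICY` table, the role names, the `placeholder_token` helper (a truncated SHA-256 hash, not vaultless tokenization), and the 10,000-wide salary bands. It shows only the general shape of resolving a per-column action from the caller's role.

```python
import hashlib
from typing import Any, Dict

# Hypothetical policy: role -> column -> action.
POLICY: Dict[str, Dict[str, str]] = {
    "regional_manager": {"name": "clear", "salary": "clear"},
    "data_scientist": {"name": "tokenize", "salary": "mask_range"},
}


def placeholder_token(value: str) -> str:
    """Stand-in token: a truncated hash, NOT a real tokenization method."""
    return "tok_" + hashlib.sha256(value.encode("utf-8")).hexdigest()[:8]


def salary_band(salary: int, width: int = 10_000) -> str:
    """Replace an exact salary with the band it falls into."""
    low = (salary // width) * width
    return f"{low}-{low + width - 1}"


def apply_policy(row: Dict[str, Any], role: str) -> Dict[str, Any]:
    """Return the view of `row` that the given role is allowed to see."""
    rules = POLICY.get(role, {})
    result: Dict[str, Any] = {}
    for column, value in row.items():
        action = rules.get(column, "deny")  # unknown roles/columns see nothing
        if action == "clear":
            result[column] = value
        elif action == "tokenize":
            result[column] = placeholder_token(str(value))
        elif action == "mask_range":
            result[column] = salary_band(int(value))
        else:
            result[column] = None
    return result


row = {"name": "Ada Lopez", "salary": 83_500}
print(apply_policy(row, "regional_manager"))
print(apply_policy(row, "data_scientist"))
```

Defaulting to "deny" for any role or column missing from the policy is a design choice in this sketch, in keeping with the need-to-know principle described above.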

04

Credential-Free Secure API Access: Unity Catalog provides secure service credentials for seamless API calls to Protegrity’s Cloud Protect.

why it matters

Improve security posture and developer productivity by removing the need to hardcode secrets or manage manual API keys within scripts and notebooks.

HOW IT WORKS

Data engineers enable an automated pipeline where Unity Catalog handles the authentication handshake with the protection service behind the scenes, preventing accidental exposure of credentials in code repositories or logs.

05

ABAC & Masking Rules: Attribute-Based Access Control and masking rules enforce granular access policies using Unity Catalog tags.

why it matters

Scale security governance efficiently by attaching policies to metadata tags (e.g., “PII” or “Confidential”) rather than individual columns, ensuring consistent protection across thousands of tables instantly.

HOW IT WORKS

A financial institution tags all social security numbers across 500 tables as “Sensitive-PII”; a single ABAC rule instantly enforces masking on every tagged column for any user who does not possess the “HR-Admin” attribute.
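The tag-driven rule in this example can be sketched in Python. The table and column names, the `COLUMN_TAGS` mapping, and the all-asterisk mask are assumptions made for illustration; only the "Sensitive-PII" tag and "HR-Admin" attribute come from the example above. The sketch is not the Unity Catalog ABAC interface, just the decision logic it describes: one rule keyed on a tag rather than on individual columns.

```python
from typing import Dict, Set, Tuple

# Hypothetical catalog metadata: (table, column) -> set of tags.
COLUMN_TAGS: Dict[Tuple[str, str], Set[str]] = {
    ("hr.employees", "ssn"): {"Sensitive-PII"},
    ("payroll.contractors", "tax_id"): {"Sensitive-PII"},
    ("hr.employees", "city"): set(),
}

MASKED_TAG = "Sensitive-PII"
EXEMPT_ATTRIBUTE = "HR-Admin"


def visible_value(table: str, column: str, value: str,
                  user_attributes: Set[str]) -> str:
    """Apply one tag-based rule: mask tagged columns unless the user is exempt."""
    tags = COLUMN_TAGS.get((table, column), set())
    if MASKED_TAG in tags and EXEMPT_ATTRIBUTE not in user_attributes:
        return "*" * len(value)  # illustrative mask; real formats may differ
    return value


analyst = {"Analytics"}
hr_admin = {"HR-Admin"}
print(visible_value("hr.employees", "ssn", "123-45-6789", analyst))
print(visible_value("hr.employees", "ssn", "123-45-6789", hr_admin))
print(visible_value("hr.employees", "city", "Austin", analyst))
```

Adding the tag to another column in `COLUMN_TAGS` extends the masking to it without touching the rule, which is the scaling property the capability describes.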

Architecture & Sample Data Flow

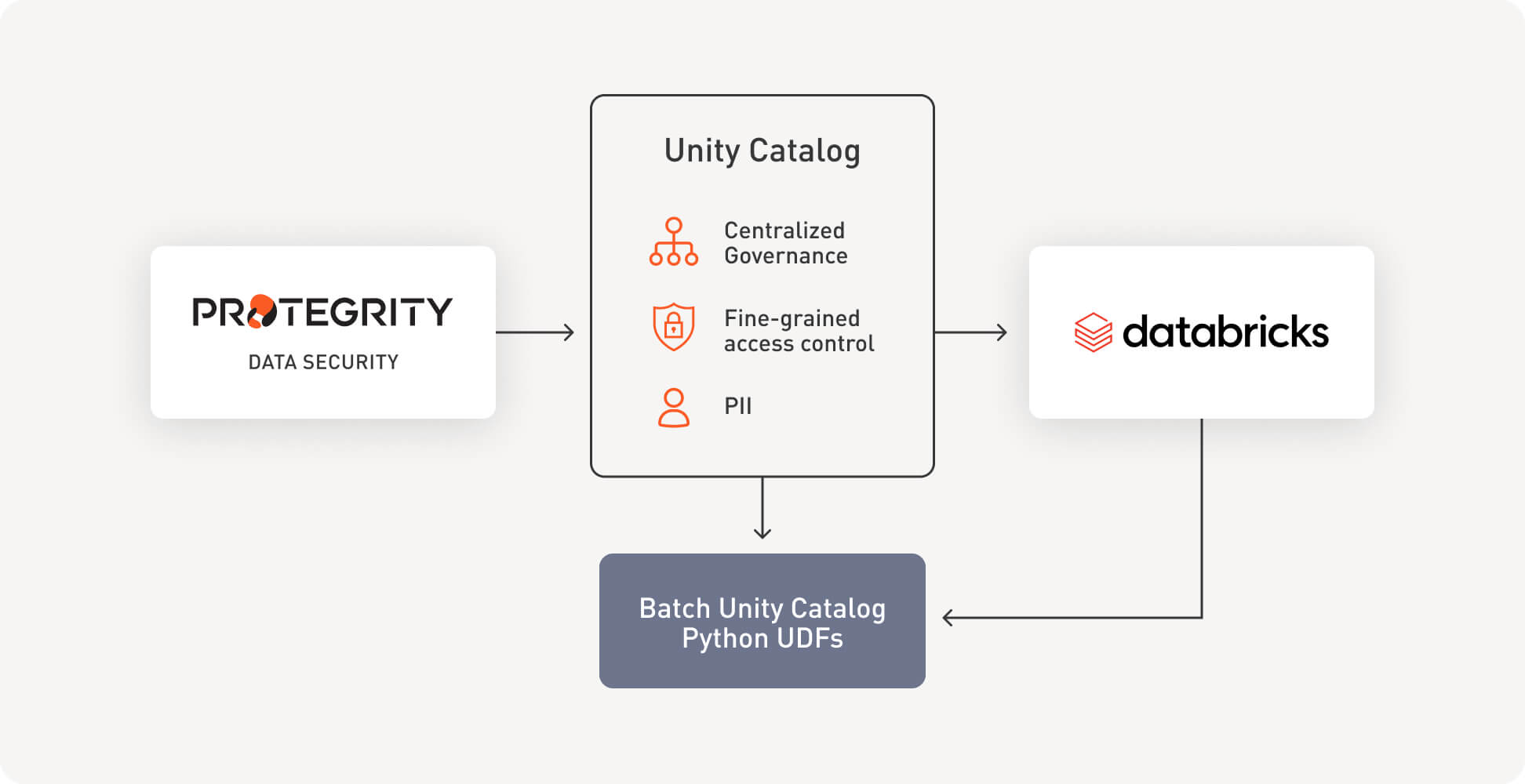

Unity Catalog provides centralized access control, auditing, lineage, and data discovery capabilities across Databricks workspaces. Protegrity can leverage Unity Catalog to automate the data protection steps being used within Databricks.

This integration ensures that sensitive data is protected across Databricks compute types and across all stages of the data lifecycle.

The data journey

Visualizing the data journey

Use Cases

Examples where Databricks has helped achieve a business goal.

Healthcare

Securing Customer Data Across Distributed Systems

Challenge

Enterprise Governance & Compliance Requirements: The client (a major medical devices enterprise) required proof of enterprise-grade data governance, HIPAA compliance, and a highly scalable architecture. The challenge was to seamlessly integrate our solution into their modern data stack while enforcing dynamic, user-based policies and secure handling of sensitive data.

Solution

We designed the solution to highlight all critical capabilities required by the client. Key elements of our solution included:

- Centralized Data Governance: Integration with Databricks Unity Catalog for a unified governance layer.

- Policy-Controlled Compute Environment: Use of Standard Clusters (with Databricks cluster policies) to run collaborative analytics workloads in a governed way.

- Role-Based Access & Masking: Implementation of strict role-based access control (RBAC) following the principle of least privilege. Only authorized user roles could access certain data.

- Secure Data Storage with Delta Lake: All sensitive data used in the demo was stored in Delta Lake tables under Unity Catalog governance. This leveraged Delta’s ACID transactions, versioning, and lineage features to ensure data integrity and easy auditing of changes—crucial for regulated health data.

- Serverless Scalability: Showcased serverless scalability using vectorized Python UDFs (Pandas UDFs) within the platform.

- Dynamic User-Aware Enforcement: Demonstrated dynamic, user-aware policy enforcement embedded in the data workflows.

Result

We conclusively demonstrated that our platform meets the healthcare company’s requirements for data governance and security:

- Proven HIPAA Compliance & Governance: The client saw their compliance requirements (HIPAA and internal governance standards) fully met. Sensitive healthcare data was properly classified, protected, and accessible only to authorized roles, satisfying their auditors and compliance officers.

- Enterprise-Grade Platform Maturity:

- Broad Scalability Across Environments:

- Differentiation Over Competitor:

AI & GenAI:

Securing the Intelligence Pipeline

As enterprises embrace GenAI, new risks emerge—gradient leakage, memorization, prompt injection, and hallucinated outputs. Protegrity addresses these with a secure AI pipeline that embeds protection at every stage:

1. Model Training

Example: A fraud detection model uses real transaction data. Protegrity ensures PII and PCI data is protected before training, preventing leakage and ensuring compliance.

2. Agentic Layer

Example: GenAI agents handling insurance claims are protected from leaking PHI through real-time tokenization and context-aware guardrails.

3. Generative Responses

Example: Retail GenAI systems generating personalized messages are protected from exposing customer data or hallucinating unsafe content.

4. Secure Text-to-Analytics

DEPLOYMENT

Protegrity supports a wide range of Databricks environments and deployment models:

environments & models supported

- Unity Catalog Object Support

- ABAC Integration (Beta)

- Masking Rules (via SQL wrapper)

- Cloud Platforms (AWS, Azure, GCP)

Deployment options include:

- Views: Manual configuration for sensitive columns.

- Masking Rules: Column-level masking via SQL UDF wrappers.

- ABAC Policies: Automatic enforcement based on tags and attributes.

Databricks + cloud providers

Databricks Data Flow is frequently utilized alongside leading cloud providers, including AWS, Azure, and GCP. In this context, we present a representative example involving Microsoft Azure and Fabric.

- Protection Option A: Data Protection within ETL tasks (on-premises and/or cloud)

- Protection Option B: Data Protection within Databricks Pipeline

- Unprotection Option A: Data Unprotection through Databricks UC Batch Python UDFs.

- Unprotection Option B: Data Unprotection within Fabric.

RESOURCES

Quick reads and deep dives to help your team plan, deploy, and scale Protegrity with Databricks—securely and without slowing analytics or GenAI.

Databricks and Protegrity Solution Brief

Discover how Protegrity’s data protection solutions, combined with AWS and Snowflake, enabled a leading insurer to scale data analytics securely and efficiently.

READ MORE

Can Your Current Architecture Handle Secure, High-Speed Analytics on Databricks?

Unified governance with Unity Catalog + Protegrity.

READ MORE

Strategic Alignment with Databricks

Protegrity’s capabilities align with Databricks’ 2025 priorities:

- Winning with Unity Catalog: Protegrity’s integration ensures secure governance across all Databricks workspaces.

- Enterprise AI Enablement: Supports secure model training, agent orchestration, and GenAI pipelines.

- Secure Data Intelligence: Embeds protection into the Lakehouse, enabling safe analytics and innovation.

Future integrations will extend to new Databricks services, ensuring that shared and collaborative data remains protected by default.

Frequently Asked Questions

Here are five common questions related to the integration, deployment, and features of the Databricks and Protegrity solution, with their answers:

Protegrity supports Single and Standard clusters and SQL Warehouses. The Protegrity Databricks protector is based on Batch Unity Catalog Python UDFs.

Protegrity Data Protection supports on-premises, hybrid, and multi-cloud environments both within and beyond Databricks. The Protegrity platform can be deployed on-premises using Gateways or SDKs in several form factors (e.g., VMs and containers). For data protected in Databricks but used elsewhere (e.g., Fabric), cloud functions or an on-premises service can enable data unprotection (e.g., Fabric UDFs).

Protegrity supports vaultless tokenization, encryption, masking, hashing, and format-preserving encryption, all centrally managed in ESA. Policy and key management, separation of duties, and detailed reporting simplify compliance and governance. For more information, see Methods of Protection.

Our customers will benefit from:

- Seamless data protection integration with Databricks via UC configuration.

- Improved performance through cloud functions.

- Consistent data protection inside and outside the Databricks environment.

Please refer to the Integration section.

See the Protegrity platform in action

Experience the transformative power of the Protegrity and Databricks partnership—a seamless integration that elevates secure, scalable, and compliant data intelligence to new heights.

Get an online or custom live demo.